Fibre Channel over Ethernet technology aims to bridge protocols serving LAN and SAN environments.

by Patrick McLaughlin

For many years data center facilities have been served by two distinct networks: the local area network (LAN) and the storage area network (SAN). As with LANs in commercial office-type environments, Ethernet has been the transmission protocol of choice in data center LANs. Its cost-competitiveness relative to other LAN protocols, its ability to transmit over relatively long distances, and its 10x upgrade path (from 10 to 100 Mbits/sec, then to 1 and 10 Gbits/sec) have kept users faithful to Ethernet as the transmission method of choice in LANs. For SANs, several protocol options have been available, and over the past several years Fibre Channel has emerged as a favorite.

SANs came to the forefront in the late 1990s as a preferred way to connect servers to external, shared storage devices. This evolution from direct-attached, local server storage to networked storage created two divergent data center networks: SANs for storage and LANs for application traffic.

Cost concerns dominate the list of potential barriers to the adoption of a unified fabric.

There was a clear need for two separate networks because Ethernet protocols were, at that time, not up to the task of transporting storage traffic: they carried performance and latency risks and had a penchant for dropping data packets. SANs are particularly intolerant of latency, which is to some extent inherent in Ethernet. For several years Fibre Channel line rates followed a doubling, rather than 10x, upgrade path: from 1 to 2 to 4 and then to 8 Gbits/sec between 1997 and 2008. In the mid and late 2000s, 10-Gbit and then 20-Gbit Fibre Channel were also introduced.

Today Ethernet speeds are quickly surpassing those of Fibre Channel. Lossless enhancements to Ethernet are also paving the way for 10-, 40-, and 100-Gbit Ethernet to potentially displace Fibre Channel as the foundation for SAN networking in the data center. That raises the question: What will the LAN and SAN infrastructure look like in the future?

The advent of the Fibre Channel over Ethernet protocol has been held up by many as a bridge between LANs and SANs in the data center. In fact, the word “bridge” has been used extensively to describe the technologies developed to carry Ethernet and Fibre Channel traffic over a single platform. The group that developed the official specifications for the Ethernet enhancements involved is the IEEE 802.1 Data Center Bridging Task Group. The FCoE protocol itself was standardized separately by the INCITS T11 technical committee as part of its FC-BB-5 specification.

Another term frequently used to describe this joining of Ethernet and Fibre Channel is “unified fabric.” That term essentially means a single network platform, or fabric, that handles both LAN and SAN traffic.

Support and reality checks

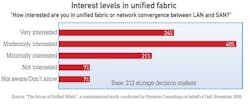

There appears to be a decent level of interest in a unified fabric among SAN operators. As we reported in an article earlier this year, a research study conducted by information technology (IT) research firm Forrester Consulting and commissioned by Dell Inc. showed that customer interest is on the rise. That interest centers on using 10-Gbit Ethernet as the physical network, or common network protocol, for both storage and application traffic.

The study, which was based on a survey of 213 storage professionals in the United States, United Kingdom, China, and the Netherlands, revealed that interest in SAN/LAN convergence is high. Sixty-six percent of respondents overall said that they are very interested or moderately interested in the concept of unified fabric or SAN/LAN convergence.

In a study conducted by Forrester Consulting for Dell last fall, 66% of storage networking decision-makers said they are either very interested or moderately interested in a unified fabric/network convergence between LAN and SAN.

It remains to be seen which protocol will win out as the de facto transport mechanism for storage traffic. The Internet Small Computer System Interface (iSCSI) has an entrenched installed base. Meanwhile, Fibre Channel over Ethernet (FCoE) is coming on strong as customers seek ways to connect existing Fibre Channel storage devices to the network. Regardless, the consensus is that high-speed Ethernet will serve as the underlying transport mechanism.

In a recent post on the Optical Components Blog (www.opticalcomponents.blogspot.com), industry analyst Lisa Huff pointed out that other protocols will not simply vanish, even with the anticipated rise of FCoE. She named standalone Fibre Channel and InfiniBand among them. Huff explains, “Today, servers in the data center typically have two to three network cards. Each adapter attaches to a different element of the data center: one supports storage over Fibre Channel, a second for Ethernet networking, and a third card for clustering, which is probably InfiniBand. Data center managers must then deal with multiple networks. A single network that could address all of these applications would greatly simplify administration within the data center.

“Fibre Channel over Ethernet is one approach that has been proposed to accomplish this goal,” she continues. “It is a planned mapping of Fibre Channel frames over full-duplex IEEE 802.3 Ethernet networks. Fibre Channel will leverage 10-Gbit Ethernet networks while preserving the Fibre Channel protocol. For this to work, Ethernet must first be modified so that it no longer drops or reorders packets, an outcome of the array of Converged Enhanced Ethernet standards in development, IEEE 802.1p and IEEE 802.1Q [principally the Data Center Bridging extensions to IEEE 802.1Q, such as 802.1Qbb priority-based flow control and 802.1Qaz enhanced transmission selection].

“With the implementation of FCoE, data centers would realize a reduced number of server network cards and interconnections; a simplified network; the leveraging of the best of Fibre Channel, Ethernet and the installed base of cabling; and a minimum of a 10G network card on each network element. For this to work, the various applications would be collapsed to one converged network adapter (CNA) in an FCoE/CEE environment.”
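Huff describes FCoE as a mapping of Fibre Channel frames onto Ethernet. A simplified sketch of that encapsulation shows what the mapping adds around an unchanged FC frame. It assumes the frame layout from the T11 FC-BB-5 standard:

- EtherType 0x8906.
- A 14-byte FCoE header: a 4-bit version, reserved bits, and a start-of-frame (SOF) byte.
- The FC frame itself.
- A 4-byte trailer carrying the end-of-frame (EOF) byte.

Several details are assumptions to verify against the standard: the specific SOF/EOF code values, the optional VLAN tag, and the default 802.1p priority of 3, which is a common convention for FCoE traffic rather than a requirement. The function name `encapsulate_fc_frame` is hypothetical. The Ethernet FCS is left to the network adapter.

```python
import struct
from typing import Optional

FCOE_ETHERTYPE = 0x8906  # EtherType assigned to FCoE
VLAN_TPID = 0x8100       # IEEE 802.1Q tag protocol identifier
SOF_I3 = 0x2E            # assumed start-of-frame code (SOFi3); verify against FC-BB-5
EOF_T = 0x42             # assumed end-of-frame code (EOFt); verify against FC-BB-5


def encapsulate_fc_frame(dst_mac: bytes, src_mac: bytes, fc_frame: bytes,
                         vlan_id: Optional[int] = None, priority: int = 3,
                         sof: int = SOF_I3, eof: int = EOF_T) -> bytes:
    """Wrap a complete FC frame (24-byte header, payload, FC CRC) in an FCoE Ethernet frame.

    Hypothetical helper for illustration only; the Ethernet FCS is not appended.
    """
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    header = dst_mac + src_mac
    if vlan_id is not None:
        # 802.1Q tag: 3-bit priority code point (the 802.1p priority that
        # priority-based flow control acts on) and a 12-bit VLAN ID.
        if not (0 <= priority <= 7 and 0 <= vlan_id <= 0xFFF):
            raise ValueError("priority must be 0-7 and vlan_id 0-4095")
        header += struct.pack("!HH", VLAN_TPID, (priority << 13) | vlan_id)
    header += struct.pack("!H", FCOE_ETHERTYPE)
    # FCoE header: version 0 in the top 4 bits plus 100 reserved bits
    # (13 zero bytes in total), followed by the SOF byte.
    fcoe_header = bytes(13) + bytes([sof])
    # FCoE trailer: EOF byte followed by 3 reserved bytes.
    fcoe_trailer = bytes([eof]) + bytes(3)
    return header + fcoe_header + fc_frame + fcoe_trailer


if __name__ == "__main__":
    fc = bytes(24) + b"SCSI data" + bytes(4)  # placeholder FC header, payload, and CRC
    frame = encapsulate_fc_frame(bytes.fromhex("0efc00010203"),
                                 bytes.fromhex("001122334455"), fc, vlan_id=100)
    # 18 (Ethernet header + VLAN tag) + 14 (FCoE header) + 37 (FC frame) + 4 (trailer)
    print(len(frame))  # 73
```

Because a Fibre Channel frame can carry up to 2,112 bytes of payload, an encapsulated frame can exceed the standard 1,500-byte Ethernet payload. This is why FCoE links are typically configured for "baby jumbo" frames of about 2.5 KB.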

In theory the approach seems great, but Huff points out the practical, dollars-and-cents realities of considering such a deployment today. “While this would greatly simplify the data center environment, it still seems too costly to implement in every network element. One CNA with an SFP+ port costs upwards of $1,200, while the total of three separate 1-, 2- and 2.5-Gbit ports still costs less than $600. This is one of the main reasons that FCoE/CEE will only be deployed where the flexibility that it provides makes sense, which is most likely at servers on the edge of the SAN.”
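Huff's price comparison can be worked through per server using only the figures she quotes. Her $1,200 is a lower bound and her $600 an upper bound, so the results below are bounds too. Dividing by aggregate line rate is our own rough normalization, since the three legacy ports serve different networks. The helper name `cna_cost_comparison` is hypothetical.

```python
# Figures quoted by Huff (US dollars): a CNA with an SFP+ port costs "upwards of" $1,200,
# while three separate 1-, 2-, and 2.5-Gbit ports together cost "less than" $600.
CNA_COST_LOWER_BOUND = 1200.0
LEGACY_COST_UPPER_BOUND = 600.0
CNA_LINE_RATE_GBPS = 10.0
LEGACY_LINE_RATES_GBPS = (1.0, 2.0, 2.5)


def cna_cost_comparison() -> dict:
    """Return cost bounds derived from Huff's figures (hypothetical helper)."""
    legacy_aggregate_gbps = sum(LEGACY_LINE_RATES_GBPS)  # 5.5 Gbit/s across three ports
    return {
        # The CNA costs more than this much extra per server
        # (strictly more, because $600 is an upper bound on the legacy ports).
        "min_premium_per_server": CNA_COST_LOWER_BOUND - LEGACY_COST_UPPER_BOUND,
        # At least this many dollars per Gbit/s of CNA line rate.
        "cna_dollars_per_gbps_min": CNA_COST_LOWER_BOUND / CNA_LINE_RATE_GBPS,
        # Under this many dollars per Gbit/s of aggregate legacy line rate.
        "legacy_dollars_per_gbps_max": LEGACY_COST_UPPER_BOUND / legacy_aggregate_gbps,
    }


if __name__ == "__main__":
    for name, value in cna_cost_comparison().items():
        print(f"{name}: ${value:,.2f}")
```

On these numbers the CNA carries a premium of more than $600 per server. Even normalized by line rate, it costs at least $120 per Gbit/s, against under about $109 per Gbit/s for the three legacy ports. That is consistent with Huff's expectation that FCoE/CEE will be deployed selectively.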

Early adoption

An early adopter of FCoE (though he doesn’t want to be known as such) spoke about his motivation for using the technology and what he has experienced from it so far. Kemper Porter is a systems manager in the data center services division of the Mississippi Department of Information Technology Services.

Designing and building an efficient data center is a top concern for Porter. His department is in the midst of planning a big move to a new data center and expects to be up and running in six months. One of the items on Porter’s agenda is simplification, and part of that plan is a transition to converged networking.

“We simply want to clean up when we get to the new building. We know we are going to have a very high rate of growth. We had to set a new precedent and deployment pattern,” says Porter.

Porter and his team essentially function as a service provider to various state agencies, provisioning servers and IT resources to application developers across the state.

Porter envisions a massive proliferation of VMware virtual machines (VMs) deployed as building blocks that look and feel like mainframes. All of them would connect to centralized storage via CNAs, with centralized data backup and disaster recovery at the server level.

CNAs consolidate the IP networking capabilities of an Ethernet network interface card (NIC) and the storage connectivity of a Fibre Channel host bus adapter (HBA) onto a single 10-Gbit Ethernet interface card, using FCoE to carry the storage traffic.
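One way to picture how a CNA separates traffic arriving on its single 10-Gbit port is a classifier keyed on the Ethernet EtherType:

- FCoE data frames (0x8906) and FCoE Initialization Protocol (FIP) frames (0x8914) go to the Fibre Channel (HBA) side.
- Everything else, such as IPv4 (0x0800), goes to the NIC side.

This is an illustrative model with a hypothetical function name, not how any particular adapter's firmware works. Real CNAs typically present the two functions to the host as separate PCI devices.

```python
ETHERTYPE_VLAN = 0x8100  # IEEE 802.1Q tag
ETHERTYPE_FCOE = 0x8906  # Fibre Channel over Ethernet data frames
ETHERTYPE_FIP = 0x8914   # FCoE Initialization Protocol (discovery and login)


def cna_function_for(frame: bytes) -> str:
    """Return which side of a CNA would handle an Ethernet frame (simplified, hypothetical model).

    "storage" means the Fibre Channel HBA function; "network" means the Ethernet NIC function.
    """
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")
    ethertype = int.from_bytes(frame[12:14], "big")
    if ethertype == ETHERTYPE_VLAN:
        # Skip one 802.1Q tag (2-byte TPID already read, plus 2-byte TCI) to reach the inner EtherType.
        if len(frame) < 18:
            raise ValueError("truncated 802.1Q tag")
        ethertype = int.from_bytes(frame[16:18], "big")
    if ethertype in (ETHERTYPE_FCOE, ETHERTYPE_FIP):
        return "storage"
    return "network"  # IPv4, IPv6, ARP, and all other traffic


if __name__ == "__main__":
    macs = bytes(12)  # placeholder destination and source MAC addresses
    print(cna_function_for(macs + bytes.fromhex("0800")))          # IPv4 -> network
    print(cna_function_for(macs + bytes.fromhex("810060648906")))  # VLAN-tagged FCoE -> storage
```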

Porter is currently using three single-port CNAs in conjunction with dual Cisco Nexus 5000 Series FCoE-capable network switches.

The CNAs are being used in a test and development capacity; Porter is waiting until the move to the new data center to put them in critical roles. He says the move to CNAs is one of necessity.

These illustrations provided by the Fibre Channel Industry Association depict data center network environments before and after the implementation of data center bridging/Fibre Channel over Ethernet.

“When you have a 3U-high server it does not have an integrated switch and you wind up with a proliferation of network cards – six Ethernet connections per server and two for Fibre Channel,” he says. “Having all of these network cards creates a spider’s den of all these cables going in and out of the servers. It creates an excellent opportunity for physical mistakes and makes the process of troubleshooting more difficult.”

Despite his aversion to so-called bleeding-edge technologies, Porter is confident that the CNAs with FCoE will meet his future needs.

“I believe touching it and working with it is the only way to get your confidence up. I would not describe myself as an early adopter. This is just about as much fun as I can handle. If I were not moving to a new data center I probably would not be doing this,” Porter says.

The old way of doing things is a non-starter for Porter. His rack servers can comfortably house 77 VM instances per physical server while maintaining mainframe-like reliability, but none of it would be possible without minimizing network adapters and port counts.

Porter now runs two to three connections to each server. “It brings the complexity way down. We will still have our Fibre Channel infrastructure with one connection rather than two per server and we still have redundant pathways because we connect to two Nexus switches,” he says.

Converged networking is the way of the future, at least for Porter. “We will now buy CNAs to put in all of our future VMware servers. That is a fact. The technology is solid enough that we are going that way,” he says.

Patrick McLaughlin is chief editor of Cabling Installation & Maintenance. Prior reporting from Kevin Komiega, former contributing editor, was also used in this article.
