Denser, more compact technologies result in new enclosure packaging trends.

Phil Dunn / Rittal Corp.

Network technology load density at data centers and colocation facilities has been increasing. As recently as two or three years ago, 50W per square foot was considered comfortable. Today, manufacturers are designing data equipment rated at 75W and 150W per square foot, and even higher, because server vendors are introducing equipment as small as 1U in height, particularly servers aimed at the Internet Service Provider (ISP) market.

While this equipment is smaller, it still requires the same amount of power. In one example, a new 1U server is rated at approximately 190W, so 42 of these servers in a single cabinet would produce roughly 8,000W of heat.
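The cabinet total is simple multiplication: 42 servers at 190W each comes to 7,980W. The Python sketch below reproduces that arithmetic and converts it to BTU/hr, the unit often used when sizing cooling equipment. The function name is illustrative, and the sketch assumes that all electrical power drawn by the servers ends up as heat inside the cabinet.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 W is about 3.412 BTU/hr


def cabinet_heat_load_watts(server_count: int, watts_per_server: float) -> float:
    """Total cabinet heat load, assuming all power drawn becomes heat."""
    return server_count * watts_per_server


# Figures from the example above: 42 1U servers rated at about 190W each.
load_w = cabinet_heat_load_watts(42, 190)
load_btu = load_w * WATTS_TO_BTU_PER_HOUR
print(f"{load_w:,.0f} W, about {load_btu:,.0f} BTU/hr")
# prints "7,980 W, about 27,228 BTU/hr"
```

Because the 190W rating is itself approximate, the result is best read as "about 8,000W" rather than as an exact figure.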

The idea that technology is getting smaller, faster, and better is certainly one that can't be argued. But with this trend, as well as others in server technology, there are many network cabinet design considerations for installers to think about.

Dimension issues

The trend towards denser rack-mount servers has affected network enclosure design, resulting in a need for deeper cabinets. For instance, standard cabinets are now 36 inches (900 mm) deep or more. One particular server is 40 inches (1,000 mm) deep and requires a 48-inch- (1,200 mm) deep cabinet, so the growth in depth is forcing a change in cabinet design.

Height, on the other hand, is often dependent on the room where the cabinets are located. Newer buildings are designed with tall network cabinets in mind, so you are beginning to see 9-foot network cabinets in these installations. With installations in existing buildings, there may be building shortcomings (no raised floor, low ceiling, concrete pillars in the room, etc.) that can influence the traditional 78-inch height of the network cabinet. Therefore, it is important to have a variety of choices in heights and depths when evaluating the cabinet design.

There are also significant design considerations inside the enclosure. Front and rear rails with Electronic Industries Alliance (EIA) hole patterns provide a mounting surface for the rack-mount equipment; this is standard among enclosure manufacturers. It is important, however, that the rail is universal, providing sufficient clearance to accommodate the different mounting styles of various servers.

Another key to mounting rack-mount devices is having the right brackets for any server. For example, 28-inch and 24-inch-deep servers mounted in the same cabinet on both the front and rear rails need depth-adjustable brackets so that the 24-inch server can reach the back rail.

Sometimes, a network cabinet includes not only server equipment but also computer equipment, such as keyboards and monitors. The design issues that arise are space and accessibility. Many network enclosures offer various options for mounting these devices in the enclosure. For example, keyboards and monitors can be installed on telescopic shelves that pull out of the enclosure. While the hole pattern is the same on the 19-inch rails, the type of hole will vary depending upon the manufacturer.

A square-hole pattern that uses captive cage nuts for mounting components has several advantages. For instance, sometimes the fixed-hole patterns on the server and cabinet rail do not line up perfectly. But if a captive nut is used to attach the server to the rail, there is "play" that will allow for an easier fit. Or, when installing directly to a tapped rail, an installer can inadvertently drive a bolt in crooked and strip the hole, requiring that the rail be retapped. With a cage nut, a stripped nut can simply be replaced with a new one, saving time in the installation process.

The space between the front and rear rails, called the "U pocket," is best reserved for rackmount equipment. To maximize space, many customers are installing equipment such as power distribution units outside of this area. If possible, you should also install power strips, fan panels, lights, and other accessories outside the "U pocket" to maximize the internal mounting area.

As your network grows, enclosures may need to be installed in various customer premises or locations: network room, colocation room, factory floor, and maybe even a Zone 4 seismic area (see sidebar, "Network cabinets in a high seismic risk facility"). Make sure your cabinet design offers installation flexibility in these different locations. More importantly, make sure the standard design offers a platform that can be used in the various locations with only minor changes, such as reinforced brackets or new doors. By establishing an infrastructure with one type of enclosure, you can standardize on one unit across the board, thereby making ordering, familiarity with the product, and installations easier.

Building shortcomings have to be looked at, too. Low ceilings, obstructions such as concrete pillars, and narrow doorways can cause problems if not considered early in the design phase.

Security is always a design issue, especially when considering the cost of today's server technology. An important design element here is the lock type. The more options available, whether mechanical or electronic, the better your chances of protecting against unwanted access. Also, consider monitoring units that provide system security and keep an eye on important control systems and computer networks. Features can include:

- Prevention against unauthorized access via swipe card/keypad authorization and lockout;

- Remote monitoring of sensors, UPS/power supply, and vandalism detection; and

- Climate control/thermal management functionality.

Keeping your cool

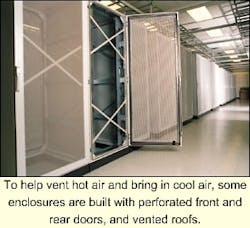

Several cooling device designs for network cabinets that have been proven to dissipate heat include perforated front and rear doors and vented roofs. Air flow for servers is normally designed to blow front-to-back, but other designs exist, such as right to left or bottom to top. A crucial climate design issue is venting hot air and bringing in cool air. Raised floors certainly are one of the best ways to bring in cool air to the cabinet, but existing facilities may not be able to accommodate raised floors. Cold air can be blown in from ceiling vents, but if the gap is too large between the ceiling and the cabinets, then the cool air will never sufficiently cool the enclosure. You may also want to consider ducting to direct the cool air to the enclosures.

As server density goes up, so does the heat. With today's server cabinet heat loads sometimes as high as 8,000W, effective enclosure cooling strategies are critical for maintaining system reliability. Server enclosures with a perforated front/rear door and a perforated roof help cool room air circulate through the enclosure. In densely packed enclosures, however, additional fan cooling systems will be required.

For example, consider a 78-inch H x 24-inch W x 36-inch D enclosure, with 4,000W of electronics heat dissipation, situated in a 68°F room temperature, and requiring a 95°F desired internal enclosure temperature. It would need approximately 460 CFM (cubic feet per minute) of air throughput. Although many servers have their own internal fans, the airflow through the enclosure would likely not be adequate without supplemental cooling.
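The airflow figure can be checked with a widely used rule of thumb for air near sea level: CFM is roughly 3.16 times the heat load in watts divided by the allowable temperature rise in °F. The sketch below applies it to the example above. The article does not state which formula produced its figure, so treating this rule of thumb as the basis is an assumption, and the function name is illustrative.

```python
def required_airflow_cfm(heat_watts: float,
                         room_temp_f: float,
                         max_internal_temp_f: float) -> float:
    """Estimate the airflow needed to carry away a heat load.

    Uses the sea-level approximation CFM = 3.16 * W / delta_T(F), which
    follows from standard air density (0.075 lb/ft^3) and specific heat
    (0.24 BTU/lb-F). It ignores altitude, humidity, and any heat lost
    through the enclosure walls.
    """
    delta_t = max_internal_temp_f - room_temp_f
    if delta_t <= 0:
        raise ValueError("internal limit must be above room temperature")
    return 3.16 * heat_watts / delta_t


# 4,000W of heat, 68F room, 95F maximum internal temperature.
cfm = required_airflow_cfm(4000, 68, 95)
print(f"{cfm:.0f} CFM")  # prints "468 CFM"
```

The result is in line with the figure of approximately 460 CFM quoted above. Note how sensitive the estimate is to the temperature rise: a warmer room narrows the 27°F margin and raises the required airflow proportionally.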

Traditional fan cooling methods, such as roof-mounted devices, are based on the "chimney effect" and are less effective due to the potential for air "short cycling" from the upper portion of the doors to the roof. New server fan assemblies are designed to be mounted to the rear enclosure door, and these should:

- Mount easily to the door stiffeners;

- Come with two high-performance fans designed to move air in densely packed enclosures;

- Exhaust air out the rear of the enclosure, thereby assisting with front to back venting of most components;

- Be height-adjustable within the cabinet to provide maximum user flexibility (by locating it exactly where it is needed); and

- Offer shallow, 1.75-inch depth that maintains maximum usable space inside the enclosure.

It is critical to consider the static pressure level when looking at fans because this will give you an indication of how they perform under actual conditions. And the higher the CFM rating, the more hot air the fan can exhaust. Do not limit the fan installation to the enclosure roof. See if other mounting possibilities exist that could target specific hot spots or provide more effective airflow. Advanced thermal modeling using computational fluid dynamics software can be used to simulate enclosure heat loading and identify thermal problems prior to field installation.

Looking at the future, a 78-inch high cabinet could have 42 1U servers mounted in it, each generating 190W of heat. That's nearly 8,000W of total heat in the cabinet! Granted, today an average figure for heat in an enclosure can be around 1,500-2,000W, but the trend towards packing more and more equipment into the cabinet will make heat dissipation a major concern.
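To see why 42 servers fit in a cabinet of this height, recall that one rack unit (1U) is 1.75 inches. The short sketch below works out the vertical rail space that 42U occupies and what remains of the 78-inch height. It assumes, for illustration only, that 78 inches is the external cabinet height, so the remainder has to cover the frame, roof, and base; the names are hypothetical.

```python
RACK_UNIT_INCHES = 1.75  # height of 1U


def rack_space_inches(units: int) -> float:
    """Vertical rail space occupied by the given number of rack units."""
    return units * RACK_UNIT_INCHES


cabinet_height_in = 78  # assumed to be the external height
needed = rack_space_inches(42)
remaining = cabinet_height_in - needed
print(f"42U needs {needed} in, leaving {remaining} in")
# prints "42U needs 73.5 in, leaving 4.5 in"
```

With every rack unit occupied, there is no spare rail space for fan trays or power strips, which is one reason to mount such accessories outside the rack-mount area.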

More compact servers also mean more of them in a cabinet with more cables, as well as an increased need for cable management. Cables that are bunched up in the back of the network enclosure can cause heat build-up by blocking air flow. If you have deep servers, there might not be much room to perform cable management. In some cases, it might be best to set aside a separate cabinet where your cables can be organized.

Standards-based infrastructure is crucial, so during the installation, get the cable structure established because cables are expensive to replace. Try to only change the network equipment when updating the network. With a standard system, you use a straight-through cable scheme and eliminate the need for special cable set-up.

Server technology will change, so consider all the possibilities, present and future, that can affect the design of your enclosures. When your servers do get more compact and deeper, generate more heat, or change in other ways, you will have the infrastructure in place to grow and maintain the network.

Phil Dunn is product manager at Rittal Corp. (Springfield, OH). He can be reached by contacting the company at (937) 399-0500. James Khamish of XO Communications also contributed information in the sidebar.

Network cabinets in a high seismic risk facility

XO Communications (formerly NEXTLINK) is a broadband company providing services to businesses in more than 51 markets, and has many colocations across the U.S. The company has quickly initiated voice and data communication systems with fiber optic technology.

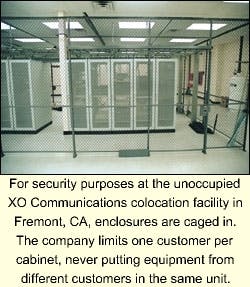

In the Bay Area, the company's fiber optic ring covers cities in four major counties (San Francisco, Alameda, Santa Clara, and San Mateo). Its colocation site in Fremont, CA is one of the company's largest affiliate facilities of its kind (more than 20,000 sq.ft.), and now houses a network room with about 100 Rittal enclosures, with 400 more planned in the future.

The site is home to data communications equipment for more than 30 customers, and James Khamish, project/applications engineer, comments, "Most of the customers here are ISPs with a variety of different servers, each with different amperage. Each customer delivers their equipment to us with their specific power requirements, load requirements, and space requirements, and we in turn design the cabinets to fit their needs." From a design perspective, it is easier for XO to choose one enclosure that will accommodate every customer application and a variety of servers, including those from Sun, Dell, and Compaq, as well as even the deeper servers.

In California, the number one requirement for colocation enclosures is high seismic risk Zone 4 compliance. Company guidelines require telecommunications equipment suppliers to test their products to NEBS (Bellcore, Technical Reference, NEBS GR-63-Core Network Equipment Building Systems Generic Equipment Reference) seismic requirements. This standard is one that XO Communications adopts for its network enclosures, according to Khamish.

Testing simulates a networking environment where the enclosure is loaded with 1,000 lbs. of weight; 50% is distributed below the center of gravity and 50% is located above the center of gravity. Khamish says other Zone 4 cabinet features that are important include anchors that bolt the enclosure to the floor in a freestanding configuration, and shock-dampening components.

Khamish points out that with servers up to 100 amps, and with customers at times needing up to 15 servers mounted into the cabinet, climate control concerns also arise. Perforated doors on the enclosures are needed to move hot air out of the enclosure, but with today's network enclosures seeing substantial heat loads, XO Communications continually looks at what else they can do to remove the hot air. For example, they're beginning to use fan trays in the middle of the enclosure for more air movement.

Since the Fremont facility is an unoccupied site, security is another major design consideration. The company limits each cabinet to one customer, never putting equipment from different customers in the same unit. But besides ensuring that the customer's equipment is housed securely, it is necessary that the customer have access to the system.

A major configuration design issue for the Fremont affiliate is the building's power. The colocation building was previously occupied by an electronics retailer, but based on trends in the server industry, the company sees a need for more power and is making plans accordingly. It is adding an emergency generator and has maximized the DC power plant to 4,800 amps.

XO Communications' network enclosures are equipped with various data comm servers and other equipment installed in a floor stanchion-supported cable rack environment. The floor stanchion system supports all external cabling to the freestanding cabinets. The external cable management system includes a fiber raceway and an AC cable management system.