Appropriate use of active equipment helps designers optimize network bandwidth.

Russell Driver and Zoltan Nadj / Hewlett-Packard Australia Ltd.

In today's networks, network equipment goes by several names. The three basic types are hubs, switches, and routers; what is known as a "switching router" combines the functions of a switch and a router. Hubs, switches, and routers work at the first, second, and third layers, respectively, of the Open Systems Interconnection (OSI) network model.

In the first networks, workstations were all connected by coaxial cable in a bus topology. Such a network has obvious drawbacks. The bus formation limits the physical configuration of a workgroup, and because signals transmitted across wire degrade over distance, the bus length is constrained. A less apparent drawback is that the workstations on a bus are connected in a daisy chain, so a cable break anywhere affects virtually the entire network. This type of environment required no networking equipment at all.

Hubs

Hubs, which provide network connectivity in a star topology, offer two major advantages over the older bus topology. First, a cable break affects only a single node rather than the entire network; second, hubs accommodate ubiquitous unshielded twisted-pair cable.

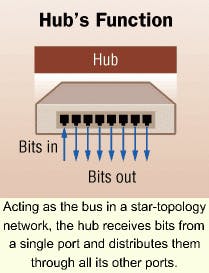

Implementing a star topology requires a device such as a hub at the center of the star to emulate the function of a bus, allowing any device to hear the transmissions of any other device connected to the central device. Hubs operate at Layer 1 of the OSI model and repeat the transmissions arriving on one port to all ports.
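To make the contrast with switches concrete, here is a minimal Python sketch of a hub's behavior. The function name `hub_repeat` and the port numbering are illustrative assumptions, and the sketch assumes the signal is not echoed back out of the port it arrived on. The key point is that the result never depends on the frame's destination address.

```python
from typing import List


def hub_repeat(num_ports: int, in_port: int) -> List[int]:
    """Return the ports a hub (hypothetical model) transmits on when a
    signal arrives on in_port. Ports are numbered 1..num_ports.

    A Layer 1 hub makes no forwarding decision: it never looks at the
    destination address, so every other port receives the signal.
    """
    if not 1 <= in_port <= num_ports:
        raise ValueError("in_port out of range")
    return [p for p in range(1, num_ports + 1) if p != in_port]


print(hub_repeat(8, 3))  # [1, 2, 4, 5, 6, 7, 8]
```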

Switches

Unlike hubs, switches make intelligent forwarding decisions rather than merely repeating all transmissions to all ports. Switches are transparent to the devices on a network and are often characterized as plug-and-play. In other words, if you replaced the hub in a small network with a switch, the network would continue to function, but with increased capacity.

When the switch receives a frame on a port, it examines the frame's header to learn the source address, and so learns which port leads to the device that sent the frame. The switch adds that information to its forwarding table, which essentially is a database of all devices with known source addresses and the ports on which they are located. By listening to network traffic, the switch quickly learns the locations of all devices on a network.
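The learning step can be sketched as a dictionary that maps each source address to the port on which it was last seen. This is a minimal Python sketch; `learn` and `ForwardingTable` are hypothetical names, and MAC addresses are assumed to be colon-separated strings.

```python
from typing import Dict

# Hypothetical forwarding table: MAC address -> port on which it was last seen.
ForwardingTable = Dict[str, int]


def learn(table: ForwardingTable, src_mac: str, in_port: int) -> None:
    """Record (or refresh) the port associated with a frame's source address."""
    table[src_mac.lower()] = in_port


table: ForwardingTable = {}
learn(table, "00:11:22:33:44:55", 1)
learn(table, "00:11:22:33:44:66", 5)
learn(table, "00:11:22:33:44:55", 3)  # the device was moved to port 3
print(table)  # {'00:11:22:33:44:55': 3, '00:11:22:33:44:66': 5}
```

Because a later frame simply overwrites the entry, a device that moves to another port is relearned automatically. Real switches also age out entries that have not been refreshed for a while; this sketch omits aging.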

With each received frame, the switch can take one of three actions. It forwards the frame to the destination port if the destination address exists in the forwarding table and is on a different port than the port on which the frame arrived. It filters the frame if the destination address is on the same port on which the frame arrived, meaning the source and destination devices are on the same segment. This filtering process is a bandwidth-saver. Finally, the switch floods the frame if the destination address does not exist in the forwarding table. Flooding means the switch forwards the frame to all ports other than the one on which it arrived.
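The three actions reduce to a lookup followed by two comparisons. The minimal Python sketch below assumes a forwarding table already populated by learning; `decide`, `Action`, and the sample addresses are hypothetical.

```python
from enum import Enum
from typing import Dict, List, Tuple


class Action(Enum):
    FORWARD = "forward"
    FILTER = "filter"
    FLOOD = "flood"


def decide(table: Dict[str, int], num_ports: int, in_port: int,
           dst_mac: str) -> Tuple[Action, List[int]]:
    """Choose forward, filter, or flood for one received frame and
    return the action with the egress ports (hypothetical model)."""
    out_port = table.get(dst_mac.lower())
    if out_port is None:
        # Unknown destination: send on every port except the arrival port.
        return Action.FLOOD, [p for p in range(1, num_ports + 1) if p != in_port]
    if out_port == in_port:
        # Source and destination share a segment: drop the frame.
        return Action.FILTER, []
    # Known destination on another port: send it only there.
    return Action.FORWARD, [out_port]


table = {"aa:00:00:00:00:01": 1, "aa:00:00:00:00:02": 1, "aa:00:00:00:00:05": 5}
print(decide(table, 8, 1, "aa:00:00:00:00:05"))  # forward to port 5
print(decide(table, 8, 1, "aa:00:00:00:00:02"))  # filter
print(decide(table, 8, 1, "aa:00:00:00:00:09"))  # flood to ports 2-8
```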

Switches flood broadcast frames. In normal network operation, a switch should never receive a frame with the broadcast address in its source address field, so the switch never learns a port for that address. The broadcast address is therefore always treated as an unknown address and flooded. Recently, however, vendors have developed what are known as Layer 3 traffic controls, which enable switches, and networks in general, to handle broadcast messages more judiciously.
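This reasoning can be checked in code. On Ethernet, the broadcast address ff:ff:ff:ff:ff:ff has the individual/group (I/G) bit set. This is the least significant bit of the first octet, and multicast addresses set it as well. A valid source address never sets it. The Python sketch below (helper names are hypothetical) refuses to learn group addresses, so a broadcast destination always misses the table and is flooded. It mirrors the article's explanation; a particular switch may also test for the broadcast address explicitly.

```python
from typing import Dict

BROADCAST = "ff:ff:ff:ff:ff:ff"


def is_group_address(mac: str) -> bool:
    """True if the I/G bit (least significant bit of the first octet) is set,
    as it is for the broadcast address and for multicast addresses."""
    first_octet = int(mac.split(":")[0], 16)
    return bool(first_octet & 0x01)


def learn_if_valid(table: Dict[str, int], src_mac: str, in_port: int) -> None:
    """Learn only individual source addresses; group addresses never appear
    as a legitimate source, so they never enter the table."""
    if not is_group_address(src_mac):
        table[src_mac.lower()] = in_port


def is_unknown(table: Dict[str, int], dst_mac: str) -> bool:
    """A destination missing from the table is flooded."""
    return dst_mac.lower() not in table


table: Dict[str, int] = {}
learn_if_valid(table, "00:11:22:33:44:55", 2)
learn_if_valid(table, BROADCAST, 4)  # malformed frame: source is ignored
print(is_unknown(table, BROADCAST))  # True, so broadcasts are flooded
```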

Switches use bandwidth more efficiently than hubs do. When forwarding a frame from one port to another, the switch follows carrier-sense multiple access with collision detection (CSMA/CD) procedures when transmitting the frame on the destination port. By replacing a hub with a switch, it's possible to divide a network into multiple segments, or collision domains, and lower the utilization of each collision domain. Additionally, the switch filters, or drops, all traffic between two devices in the same collision domain, ensuring that traffic local to a port never reaches any devices on the switch's other ports. And when traffic is forwarded, it is sent only to the destination port.

Switches can simultaneously support more than one conversation between ports. For instance, data entering through port 1 can be forwarded through port 5 at the same time that data entering through port 6 can be forwarded through port 4. In fact, the switch can support as many conversations as it has pairs of ports. By contrast, a hub can support only one conversation at a time because it forwards data that arrives through one port to all its ports at the same time.
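Assume each conversation ties up one input port and one output port, as in half-duplex operation. Under that assumption, the "pairs of ports" limit and the example above (port 1 to port 5 alongside port 6 to port 4) can be checked with a short Python sketch. The function names are hypothetical.

```python
from typing import Iterable, Tuple


def max_conversations(num_ports: int) -> int:
    """Each conversation uses two ports, so at most one per pair of ports."""
    return num_ports // 2


def can_run_concurrently(conversations: Iterable[Tuple[int, int]]) -> bool:
    """True if no port appears in more than one (in_port, out_port) conversation."""
    busy = set()
    for in_port, out_port in conversations:
        if in_port == out_port or in_port in busy or out_port in busy:
            return False
        busy.update((in_port, out_port))
    return True


print(max_conversations(8))                    # 4
print(can_run_concurrently([(1, 5), (6, 4)]))  # True
print(can_run_concurrently([(1, 5), (7, 5)]))  # False: port 5 is already busy
```

On a hub, the equivalent limit is one conversation in total, whatever the port count. With full-duplex links a port could send and receive at the same time, which this sketch deliberately ignores.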

The switch's ability to support multiple simultaneous conversations effectively multiplies the bandwidth of the network connected to the switch. For instance, if four workstations send data to four servers simultaneously, the 10-Mbit/sec switch can allocate 10 Mbits/sec to each of the connections, providing an effective bandwidth of 40 Mbits/sec. By contrast, under the same conditions, the hub must divide its total bandwidth of 10 Mbits/sec among the four conversations. Consequently, each conversation receives at most 2.5 Mbits/sec, far less than it would in a switched environment.
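The arithmetic in this example can be written out directly. The sketch below is idealized. It assumes every switched conversation runs at full port speed and ignores collisions and protocol overhead, which in practice push a shared hub's per-conversation figure lower still. The function names are illustrative.

```python
def switched_aggregate_mbps(port_speed_mbps: float, conversations: int) -> float:
    """Each switched conversation gets the full port speed."""
    return port_speed_mbps * conversations


def hub_per_conversation_mbps(hub_speed_mbps: float, conversations: int) -> float:
    """On a hub all conversations share one medium (best case: an even split)."""
    return hub_speed_mbps / conversations


print(switched_aggregate_mbps(10, 4))    # 40
print(hub_per_conversation_mbps(10, 4))  # 2.5
```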

The switch's support of full-duplex networking and speed changes further enhances its ability to use bandwidth efficiently. Full-duplex transmission is possible only when the switch is connected directly to an end node or to another switch, not through a hub. Speed changes (the ability to support ports of different speeds) are possible because of a switching feature called store-and-forward. In the store-and-forward process, the switch buffers an entire frame and checks it for errors before retransmitting it. Consequently, the switch can receive data from a 10-Mbit/sec connection and forward it to a 100-Mbit/sec connection. The switch can also receive data from 10 separate 10-Mbit/sec connections and forward the data to a single 100-Mbit/sec connection.
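Store-and-forward (receive the whole frame, check it, then send it) is what lets the ingress and egress ports run at different speeds. Because the complete frame sits in a buffer, the egress port can clock it out at its own rate. The minimal Python sketch below assumes the frame's last four bytes hold a CRC-32 of the rest, computed with `zlib.crc32` and stored little-endian. This is a stand-in for the Ethernet frame check sequence, not a claim of bit-exact compatibility, and the helper names are hypothetical. A second helper checks whether ten 10-Mbit/sec inputs fit into one 100-Mbit/sec output at full load.

```python
import struct
import zlib
from typing import Iterable, Optional


def build_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 trailer (stand-in for the Ethernet FCS)."""
    return payload + struct.pack("<I", zlib.crc32(payload))


def store_and_forward(frame: bytes) -> Optional[bytes]:
    """Buffer the entire frame, verify its checksum, and return it for
    retransmission; return None (drop it) if it arrived corrupted."""
    if len(frame) < 4:
        return None
    body, fcs = frame[:-4], frame[-4:]
    if struct.pack("<I", zlib.crc32(body)) != fcs:
        return None
    return frame


def egress_keeps_up(input_speeds_mbps: Iterable[float], output_speed_mbps: float) -> bool:
    """True if the output port can carry all inputs running flat out."""
    return sum(input_speeds_mbps) <= output_speed_mbps


good = build_frame(b"example payload")
bad = bytes([good[0] ^ 0x01]) + good[1:]  # one bit flipped in transit
print(store_and_forward(good) is not None)  # True
print(store_and_forward(bad))               # None
print(egress_keeps_up([10] * 10, 100))      # True
```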

Routers

In contemporary networks, routers perform two major functions: They connect Layer 3 networks to each other and assume responsibility for knowing how to reach any network on the internetwork. Using this knowledge, routers forward packets from the source device and network to the destination device and network. Routers also support multiple paths, meaning they can learn more than one route to networks and devices.

When a router receives a data packet from the source device, it inspects the destination address, determines the best route to that destination network, and forwards the packet along the best route.
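The article doesn't say how the "best route" is chosen. A common rule in IP routers is longest-prefix match: among all routes whose network contains the destination, pick the most specific one. The Python sketch below uses the standard `ipaddress` module. The route table, next-hop addresses, and `best_route` name are hypothetical, and the sketch ignores the metrics a router would use to choose among multiple paths to the same network.

```python
import ipaddress
from typing import List, Optional, Tuple

# (destination network, next hop); hypothetical example table
Route = Tuple[ipaddress.IPv4Network, str]

ROUTES: List[Route] = [
    (ipaddress.IPv4Network("0.0.0.0/0"), "192.0.2.1"),  # default route
    (ipaddress.IPv4Network("10.0.0.0/8"), "192.0.2.10"),
    (ipaddress.IPv4Network("10.1.0.0/16"), "192.0.2.20"),
]


def best_route(routes: List[Route], dst: str) -> Optional[Route]:
    """Return the most specific route whose network contains dst, or None."""
    addr = ipaddress.IPv4Address(dst)
    matches = [route for route in routes if addr in route[0]]
    if not matches:
        return None
    return max(matches, key=lambda route: route[0].prefixlen)


for dst in ("10.1.2.3", "10.9.9.9", "203.0.113.5"):
    print(dst, "->", best_route(ROUTES, dst)[1])
```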

Historically, routers were used to interconnect local area networks (LANs). Because their forwarding process is highly involved, however, routers are comparatively slow, and many of today's networks use switches to interconnect LANs instead. Switches are faster than routers because their forwarding process is far less complex: if a router and a switch were implemented on the same processor, the switch would have a higher forwarding rate.

That does not mean, however, that a router can never be as fast as a switch. Like any device, a switch is subject to limiting factors other than its own speed and power. Specifically, every media type can carry packets only up to some maximum rate, and no matter what capabilities the switch has, it cannot forward traffic faster than the media can carry it. When the media, rather than the switch, is the bottleneck, a router built on a more powerful processor could achieve a similar forwarding rate. But that router would be expensive, because it would need more processing power to do the same job.
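The media limit can be quantified for Ethernet. Each frame occupies the wire for its own length plus an 8-byte preamble and start-of-frame delimiter and a 12-byte interframe gap. With minimum-size 64-byte frames, a link can therefore carry at most (link rate) / (84 × 8 bits) frames per second. The Python sketch below computes that ceiling; the function and constant names are illustrative.

```python
PREAMBLE_AND_SFD_BYTES = 8
INTERFRAME_GAP_BYTES = 12
MIN_FRAME_BYTES = 64  # minimum Ethernet frame, including the 4-byte FCS


def max_frames_per_second(link_bps: float, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Upper bound on frames per second the medium itself can carry."""
    bits_on_wire = (frame_bytes + PREAMBLE_AND_SFD_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_bps / bits_on_wire


for mbps in (10, 100, 1000):
    rate = max_frames_per_second(mbps * 1_000_000)
    print(f"{mbps} Mbit/s: {rate:,.0f} frames/s")
```

At 10 Mbits/sec this works out to roughly 14,881 frames per second. A device that can already forward that many frames per port is running at wire speed, and extra forwarding capacity buys nothing on that link.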

Routers are still used to interconnect wide area networks (WANs) because the routers' complex forwarding process allows them to transfer packets across non-Ethernet-based WAN links. Occasionally, in situations requiring sophisticated filtering or security features, routers are still used to connect LANs.

Just as switches delimit collision domains, routers delimit broadcast domains. The router prevents one network's broadcasts from reaching other networks. In fact, controlling broadcasts is one of the primary reasons to deploy routers in a network.
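Treating the network as a graph makes the idea concrete. A broadcast spreads through hubs and switches until it reaches a router, which receives it but does not pass it on. The Python sketch below finds every device a broadcast reaches using a breadth-first search; the topology, device names, and helper functions are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, List, Set, Tuple

FLOODS_BROADCASTS = {"hub", "switch"}  # routers and end hosts do not relay them


def build_links(edges: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Turn a list of cable connections into an adjacency list."""
    links: Dict[str, List[str]] = {}
    for a, b in edges:
        links.setdefault(a, []).append(b)
        links.setdefault(b, []).append(a)
    return links


def broadcast_domain(links: Dict[str, List[str]], kinds: Dict[str, str],
                     origin: str) -> Set[str]:
    """Return every device that receives a broadcast sent by origin."""
    reached = {origin}
    queue = deque([origin])
    while queue:
        node = queue.popleft()
        if node != origin and kinds[node] not in FLOODS_BROADCASTS:
            continue  # the broadcast stops here (for example, at a router)
        for neighbor in links.get(node, []):
            if neighbor not in reached:
                reached.add(neighbor)
                queue.append(neighbor)
    return reached


links = build_links([("pc1", "sw1"), ("pc2", "sw1"), ("sw1", "r1"),
                     ("r1", "sw2"), ("pc3", "sw2")])
kinds = {"pc1": "host", "pc2": "host", "pc3": "host",
         "sw1": "switch", "sw2": "switch", "r1": "router"}
print(sorted(broadcast_domain(links, kinds, "pc1")))  # ['pc1', 'pc2', 'r1', 'sw1']
```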

Routing switches

To address the latency inherent in routers, several network-equipment vendors have introduced routing switches. Essentially, these are routers with capabilities that let them reach forwarding rates usually achieved only by switches. The two primary capabilities of routing switches from most vendors are "route once, switch many" and "virtual interfaces."

- Route once, switch many. Routing switches perform the complete conversation setup associated with routers only once per conversation. When a routing switch receives the first packet of a conversation, it performs the necessary software operations, including route lookup, filtering, and prioritization processing. The routing switch caches the routing information so that the rest of the conversation is forwarded (actually, switched) in hardware.

- Virtual interface. With traditional routers, it's not possible to assign a single subnet to more than one router interface. In other words, each port on a router is a different interface. With routing switches, however, administrators can group any number of ports together to service a single subnet. That's especially useful in situations in which a particular subnet requires large amounts of bandwidth. When ports are grouped on a routing switch, traffic traveling between those ports is switched at Layer 2 of the OSI model.
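The two features in the list above can be modeled together in a toy Python class. Everything here is hypothetical: `RoutingSwitch`, the port-to-subnet map, and the `lookup` stand-in for the software route lookup are invented for illustration. Real products implement the cache in hardware and may key it on more fields than a source/destination pair.

```python
from typing import Callable, Dict, Tuple


class RoutingSwitch:
    """Toy model (hypothetical) of a routing switch's two key features."""

    def __init__(self, port_to_subnet: Dict[int, str],
                 software_lookup: Callable[[str], int]) -> None:
        # Virtual interfaces: any number of ports may share one subnet.
        self.port_to_subnet = port_to_subnet
        # Slow path: route lookup, filtering, and prioritization in software.
        self.software_lookup = software_lookup
        # Route-once cache: conversation (src, dst) -> egress port.
        self.flow_cache: Dict[Tuple[str, str], int] = {}
        self.software_lookups = 0

    def switched_at_layer2(self, in_port: int, out_port: int) -> bool:
        """Ports grouped into the same virtual interface are switched, not routed."""
        return self.port_to_subnet[in_port] == self.port_to_subnet[out_port]

    def egress_port(self, src: str, dst: str) -> int:
        """Route the first packet of a conversation; switch the rest from the cache."""
        key = (src, dst)
        if key not in self.flow_cache:
            self.software_lookups += 1
            self.flow_cache[key] = self.software_lookup(dst)
        return self.flow_cache[key]


def lookup(dst: str) -> int:
    # Hypothetical stand-in for a full route lookup.
    return 5 if dst.startswith("10.2.") else 6


rs = RoutingSwitch({1: "10.1.0.0/16", 2: "10.1.0.0/16", 3: "10.1.0.0/16",
                    4: "10.1.0.0/16", 5: "10.2.0.0/16", 6: "10.3.0.0/16"},
                   lookup)
for _ in range(1000):
    rs.egress_port("10.1.0.7", "10.2.0.9")
print(rs.software_lookups)          # 1
print(rs.switched_at_layer2(1, 4))  # True: same virtual interface
print(rs.switched_at_layer2(1, 5))  # False: routed between subnets
```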

Routing switches are best deployed in networking environments that require backbone interconnect devices to provide both the speed of switches and the security of routers. For that reason, routing switches are a promising solution to the chronic router overloads that plague many large-scale networks.

In recent years, the traffic patterns of many LANs have shifted, so that backbone bandwidth gradually has become more important than workgroup bandwidth. A few years ago, approximately 80% of LAN traffic stayed within the workgroup, with only 20% crossing the network backbone. Now, however, the ratio is often reversed: in many networks, 80% of traffic traverses the backbone. The growth of intranets and the consolidation of network servers have largely spurred this shift.

As more network traffic crosses the backbone, network designers must develop new strategies for providing backbone bandwidth. When most traffic was localized within the workgroup, the link to the backbone only had to be big enough to carry the small percentage of traffic that crossed the backbone. Now, because the majority of traffic crosses the backbone, more bandwidth is required at the backbone link.
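A rough sizing calculation shows why the shift matters. The workgroup count and per-workgroup load below are invented for illustration; only the 80/20 and 20/80 splits come from the text. The function name is hypothetical.

```python
def backbone_load_mbps(workgroups: int, traffic_per_workgroup_mbps: float,
                       backbone_share: float) -> float:
    """Traffic (Mbit/s) that must cross the backbone when backbone_share
    of each workgroup's traffic leaves the workgroup."""
    if not 0.0 <= backbone_share <= 1.0:
        raise ValueError("backbone_share must be between 0 and 1")
    return workgroups * traffic_per_workgroup_mbps * backbone_share


# Hypothetical site: 10 workgroups, each generating 40 Mbit/s of traffic.
before = backbone_load_mbps(10, 40, 0.20)  # 80/20 pattern
after = backbone_load_mbps(10, 40, 0.80)   # 20/80 pattern
print(f"{before:.0f} Mbit/s -> {after:.0f} Mbit/s ({after / before:.0f}x)")
```

With the same total traffic, reversing the ratio quadruples the load the backbone must carry. Here it rises from about 80 to about 320 Mbits/sec, more than a single 100-Mbit/sec link can handle.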

In response to this need, network designers frequently are using interconnect switches and routing switches to build faster backbones with higher bandwidth than the desktop connectivity devices they serve. The situation is analogous to the traffic flows in major cities. Neighborhood streets, like the links connecting workgroup nodes, are relatively narrow and designed to carry sparse local traffic. Secondary streets designed to carry heavier traffic loads connect neighborhoods. At each subsequent stage, traffic levels and the streets' carrying capacity increase until, finally, major portions of the city are connected by multilane highways designed to carry large amounts of traffic.

Technological advances, including 10/100-Mbit/sec and gigabit-speed switches as well as new strategies for linking switches, have made high-bandwidth backbones possible. Switch-linking strategies in particular have let designers build high-bandwidth backbones without resorting to collapsed backbones, which are contained entirely in a single device such as a switch or router. Before high-speed linking technologies emerged, designers most often could achieve high backbone bandwidth only by using the backplane of a single device. When devices were linked in this type of environment, the links inevitably slowed the backbone because they could never be as fast as the devices' backplanes.

Russell Driver is the senior presales network consultant for Hewlett-Packard Australia Ltd., and Zoltan Nadj is business development manager of Procurve Network Products at Hewlett-Packard Australia Ltd.

This article was originally published in the October/November 1999 issue of Cabling Installation & Maintenance Australia/ New Zealand.