Comparing cabling and networking options for making the high speed migration from 40 to 100G

By Qing Xu, Belden

Global Internet Protocol (IP) traffic has been increasing rapidly in the enterprise and consumer segments, driven by the growing number of internet users and connected devices, faster wireless and fixed broadband access, high-quality video streaming, and social networking.

To support fast-growing cloud-based services, data centers are being built globally to provide necessary computing, storage, and content delivery services to enterprise and customer users.

During the last few years, the data center market has become one of the most active, fastest-growing markets driving technical innovation. Data center operators are striving to build faster, denser, bigger, more-cost-effective and more-power-efficient data center infrastructure that can be scalable, sustainable, and resilient.

Based on estimates from leading cloud service providers and market research firms, the industry experiences the following changes in each two-year cycle.

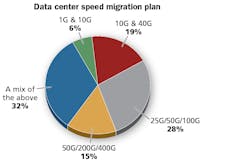

A survey conducted by Belden and Mission Critical magazine shows more than a quarter of enterprise data centers have begun planning for a migration of their access network to 25G and of their aggregate/core network to 100G.

Time for 100G Ethernet

Since 2016, the “Super 7” cloud computing companies—Amazon, Facebook, Google, Microsoft, Alibaba, Baidu and Tencent—have been deploying 100G Ethernet in their data center locations. The footprints of these hyperscale data centers have already surpassed the size of a few football fields, and they continue to grow substantially to accommodate massive amounts of servers and switches.

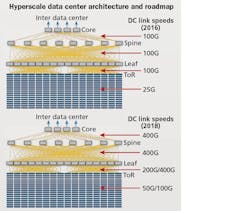

25/50G servers and 100G switch ports have become ubiquitous in hyperscale data centers, replacing previous 10G servers and 40G switches. This speed migration has boosted overall system throughput by 2.5x with small incremental costs.

According to a forecast by Dell’Oro Group, total 100G switch port shipments will outnumber 40G switch port shipments in 2017-2018. And according to market research firm LightCounting, 100G transceiver module and switch port shipments were projected to grow by 10x in 2017 compared to the previous year, ramping up from 50,000 ports shipped in 2015 to 5 million ports shipped in 2017. Further down the road, the industry estimates that 200G/400G Ethernet port shipments will start in 2018 and reach around 10 million between 2019 and 2021.

Based on our recent survey, conducted in partnership with Mission Critical magazine, many enterprise data centers have started planning for access network migration to 25G and aggregate/core network migration to 100G. Some organizations have already started to consider 50G/200G/400G down the road.

Layer 0 options for 100G Ethernet

As data center speeds go up, the performance of Layer 0 (i.e. the physical media for data transmission) becomes increasingly critical to ensure link quality.

Deployment of 10G and 40G (4x 10G) Ethernet in data centers has seen multimode optics (multimode transceivers and multimode fibers) as the most common and cost-effective solution. Multimode optics can support up to 400 meters in reach, satisfying most data center reach requirements. 10G and 40G singlemode optics, on the other hand, are mostly used for interbuilding interconnects.

As the bandwidth-hungry data center space grows bigger and bigger, however, 25G and 100G (4x 25G) Ethernet deployment has triggered many technical innovations and cost-optimized products to satisfy different data center topologies and applications. QSFP28 transceiver modules are the most popular solution that enables Ethernet switches populated with 100G ports (e.g. 32x 100G Top of Rack switch). Many vendors have also introduced SFP28 modules to support 25G Ethernet to servers.
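
The lane arithmetic behind these port speeds can be sketched in a few lines of Python. This is only an illustration: the function name `port_rate_gbps` is hypothetical, and the figures are the nominal rates quoted in this article (4x 10G, 4x 25G, a 32x 100G switch), not measured throughput.

```python
def port_rate_gbps(lanes, lane_rate_gbps):
    """Nominal port speed: number of lanes times the per-lane rate."""
    return lanes * lane_rate_gbps

# Nominal figures taken from the article text.
old_port = port_rate_gbps(4, 10)   # 40G port built from 4x 10G lanes
new_port = port_rate_gbps(4, 25)   # 100G port built from 4x 25G lanes

# Ratio of the new port speed to the old one (the 2.5x quoted earlier).
speedup = new_port / old_port

# Nominal aggregate capacity of a 32x 100G Top of Rack switch, in Gbit/s.
switch_capacity_gbps = 32 * new_port

print(old_port, new_port, speedup, switch_capacity_gbps)
```

The same per-lane ratio applies at the server, where a single 25G lane replaces a single 10G lane.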

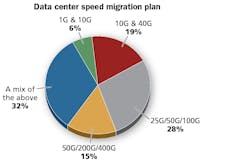

With one exception, duplex and parallel multimode physical medium dependent (PMD) reaches top out at 400 meters. The one exception is 40G-SWDM4, which employs short-wave division multiplexing and achieves slightly more than 400 meters using OM5 fiber.

Multimode and singlemode link distance limits

Multimode fiber (MMF) link distance is limited by the transceiver link loss budget and by modal and chromatic dispersion. In the IEEE 802.3 and Fibre Channel standards, newer fiber types, such as OM4 and OM5, can support longer maximum reach thanks to higher effective modal bandwidth (EMB). In the latest ANSI/TIA-568.3-D standard, only OM3, OM4, and OM5 are recommended for new premises cabling installation.

Duplex (e.g. two-fiber LC duplex) and parallel (e.g. 8-fiber or 12-fiber MPO) are both popular MMF cabling solutions.

Unlike MMF, singlemode fiber (SMF) has virtually unlimited modal bandwidth, especially when operating in the zero-dispersion wavelength range near 1300 nm, where material dispersion and waveguide dispersion cancel one another. Typically, the singlemode laser also has a much narrower spectral width, and the actual reach limit is not bounded by differential mode delay (DMD) as it is in MMF cabling.

For data center and LAN applications, SMF link distance is mainly limited by the transceiver link loss budget. Note that, in the latest ANSI/TIA-568.3-D standard, only OS2 SMF is recommended for new premises cabling installation.
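
A loss-budget check of this kind can be sketched as follows. This is a minimal illustration, not a standards-based calculation: the function names are hypothetical, and the budget, attenuation, and connector-loss values in the example are placeholder assumptions rather than figures from any transceiver datasheet or standard. It also ignores dispersion and other power penalties.

```python
def link_loss_db(length_m, fiber_db_per_km, connector_loss_db, n_connectors):
    """Total passive loss: fiber attenuation plus all connector losses."""
    return length_m / 1000.0 * fiber_db_per_km + connector_loss_db * n_connectors


def max_reach_m(budget_db, fiber_db_per_km, connector_loss_db, n_connectors):
    """Longest fiber run (in meters) that keeps total loss within the budget."""
    remaining_db = budget_db - connector_loss_db * n_connectors
    if remaining_db <= 0:
        return 0.0  # connectors alone already exhaust the budget
    return remaining_db / fiber_db_per_km * 1000.0


# Placeholder values, assumed for illustration only.
BUDGET_DB = 3.0          # assumed transceiver link loss budget
FIBER_DB_PER_KM = 0.4    # assumed singlemode fiber attenuation
CONNECTOR_DB = 0.5       # assumed loss per mated connector pair
N_CONNECTORS = 2

loss = link_loss_db(500, FIBER_DB_PER_KM, CONNECTOR_DB, N_CONNECTORS)
reach = max_reach_m(BUDGET_DB, FIBER_DB_PER_KM, CONNECTOR_DB, N_CONNECTORS)
print(f"loss at 500 m: {loss:.2f} dB, loss-limited reach: {reach:.0f} m")
```

In practice the usable reach is also capped by the maximum distance specified for the transceiver type, so a loss-limited figure like this one is an upper bound at best.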

Historically, 10-km long reach (LR) singlemode transceiver modules have been widely used for metro and large local area networks. With the cloud ecosystem expansion, the high cost of 10-km LR transceivers became a bottleneck to supporting 100G Ethernet deployment in data centers that started in 2015.

Cost-optimized singlemode transceivers designed for large-footprint data center deployment have become the winners in cloud data centers. These include 100G CWDM4/CLR4 (coarse wavelength-division multiplexing) transceiver modules, which support up to 2-km reach over a duplex fiber pair, and PSM4 (parallel singlemode) modules, which support 500-m or 2-km maximum reach over parallel fiber cable.

Different types of singlemode transceivers have been developed to achieve a good balance between performance and cost. Following are the most common transceiver types that have been developed to support different reaches and link-loss budgets in data centers and LAN environments.

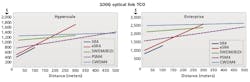

The total cost of ownership (TCO) of a 100G optical link can vary significantly depending on the transceiver and cabling architecture used. The costs also vary between hyperscale and enterprise data centers.

100G SMF vs. MMF cost comparison

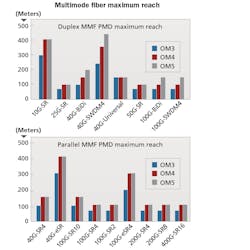

There are a variety of 100G optical interconnect solutions for data center applications. While singlemode transceivers are more expensive than multimode transceivers, singlemode fiber is less expensive than multimode fiber, which makes it more suitable for longer-distance links. The table elaborates on the technologies and their main characteristics, including fiber cabling and transceiver costs.

It is important to compare the total cost of ownership (TCO) of 100G optical links for different singlemode and multimode options. The TCO estimation is for one direct 100G optical link, including the cost of two transceivers and the fiber cables that interconnect them.

- For a reach of less than 100 m, a multimode SR4 solution using MPO-12 MMF cabling will continue to be the most cost-effective technology.

- For reaches longer than 100 m, singlemode PSM4 and CWDM4 are more cost-effective in greenfield installations. eSR4, a proprietary multimode solution, can support up to a 300-m link distance in OM4; it allows the reuse of an installed MMF cabling system (e.g. a 40G eSR4 upgrade to 100G eSR4).

- A 100G BiDi/SWDM solution is new to the market; when paired with OM5 MMF, it can support an extended reach in duplex cabling systems. Because 40G BiDi has been a big market success, we expect 100G BiDi/SWDM to be a competitive contender as the 100G ecosystem becomes more mature.
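
The per-link TCO comparison described above (two transceivers plus the cable between them) can be sketched as a small calculation. Everything numeric about cost here is a made-up placeholder in arbitrary units, chosen only to show the shape of the comparison; the class and function names are hypothetical as well. The reach limits follow the figures quoted in this article (100 m for SR4, 500 m for PSM4, 2 km for CWDM4).

```python
from dataclasses import dataclass


@dataclass
class LinkOption:
    name: str
    transceiver_cost: float   # per module, arbitrary units (placeholder)
    cable_cost_per_m: float   # per meter of cable, arbitrary units (placeholder)
    max_reach_m: float        # reach limit as quoted in the article


def link_tco(option, length_m):
    """Cost of one direct link: two transceivers plus cable.

    Returns None when the link is longer than the option can reach.
    """
    if length_m > option.max_reach_m:
        return None
    return 2 * option.transceiver_cost + option.cable_cost_per_m * length_m


def cheapest(options, length_m):
    """Return (cost, name) of the cheapest feasible option, or None."""
    feasible = [
        (cost, opt.name)
        for opt in options
        if (cost := link_tco(opt, length_m)) is not None
    ]
    return min(feasible) if feasible else None


# Hypothetical cost figures, for illustration only.
OPTIONS = [
    LinkOption("SR4", transceiver_cost=100.0, cable_cost_per_m=3.0, max_reach_m=100),
    LinkOption("PSM4", transceiver_cost=200.0, cable_cost_per_m=2.0, max_reach_m=500),
    LinkOption("CWDM4", transceiver_cost=300.0, cable_cost_per_m=0.5, max_reach_m=2000),
]

for length in (50, 300, 3000):
    print(length, cheapest(OPTIONS, length))
```

With these placeholder inputs, the short link favors the multimode option and the longer one a singlemode option, which mirrors the pattern in the list above. Actual results depend entirely on real transceiver and cabling prices.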

Note that there is a difference in cost structure between the hyperscale data center segment and the enterprise data center segment. Some of those differences are outlined here.

The higher the speed and longer the distance of a data center network, the more likely singlemode fiber is required or preferred. Multimode cabling serves the needs of intrabuilding connections.

In hyperscale data centers, 100G core as well as spine-and-leaf speeds, and 25G top-of-rack speeds were the norm in 2016. In 2018, speeds are ratcheting up to 400G in the core and spine, 200/400G in the leaf, and 50/100G in top-of-rack architectures.

100G migration plan

Singlemode solutions are needed for large-footprint data centers, while multimode solutions dominate applications requiring reaches of less than 100 m thanks to their relatively low cost and high technical feasibility. According to LightCounting’s report, more than 50 percent of 100G optical links in data centers use transceiver modules with 500-m and 2-km reach, including PSM4 and CWDM4. This trend will continue for 200G and 400G Ethernet deployment starting in 2019. Also according to LightCounting, singlemode PSM4 and CWDM4, followed by multimode SR4, were the most-shipped 100G optical transceiver products in 2016 and 2017.

100G in hyperscales—Unlike traditional data centers that use three-tier architectures (access, aggregation, core), modern hyperscale data centers deploy leaf-spine architectures that all require a massive amount of crossconnect to support high system service availability through network function virtualization (NFV). Direct-attach copper is the dominant interconnect solution for servers to Top-of-Rack switch interconnects, for a reach of up to 5 meters. Because most new hyperscale data centers are greenfield installations, singlemode optics are being massively deployed for the links above Top of Rack level, which support very large system scalability and longer link distances.

To support longer reach and more-flexible data center architecture, singlemode fiber infrastructure has become the de facto solution in large data center facilities. By comparison, standard multimode transceivers (e.g. 100GBase-SR4) and OM4 multimode fiber cable fall short on reach: they support a maximum link distance of only 100 to 300 meters.

100G in enterprise data centers—In many enterprise data centers, although 40G may meet immediate business needs, many organizations are looking for an economic and futureproof migration path toward 100G and beyond. Considering historical MMF dominance in data centers, in addition to multimode transceiver cost advantages, multimode fiber cabling systems will continue to be the most popular cabling solution for enterprise data centers for a long time.

Ninety percent of multimode fiber links in data centers are below 100 m in reach; therefore, many installed-base brownfield MMF cabling systems can be reused to support future speed migration without shortening link distance. New multimode transceivers have also been developed to be backward-compatible with installed OM3 and OM4 cabling systems.

When it comes to new greenfield fiber cabling deployment, multimode transceiver vendors have already shown products that can support up to 400G Ethernet, especially with promising new technology that can support multiple wavelengths over the same MMF thread (i.e. short wavelength division multiplexing).

When the greenfield installations of today become the brownfield installations of the next upgrade cycle, the installed-base MMF infrastructure will continue to support bandwidth growth smoothly.

Qing Xu is technology and applications manager for optical fiber systems with Belden (www.belden.org).